In my previous article, I outlined the ICO’s findings about AI recruitment tools and the 296 recommendations they made to providers. Several defence contractors have asked me: “What should we actually be doing about this?”

I wanted to share a practical answer.

Your AI service provider may not volunteer this information

The ICO audits were consensual. Providers volunteered to be assessed. Even with that cooperation, the findings revealed significant compliance gaps.

If those participating in good faith had these problems, what about the ones who didn’t volunteer?

Your legal liability doesn’t end when you outsource to an AI system. Under the Equality Act 2010, if your recruitment process discriminates, even through an algorithm you didn’t build, you’re accountable.

Compensation is uncapped. Serious cases result in awards of around £50,000. The Equality and Human Rights Commission is actively supporting claimants, as we saw in the Manjang case.

You need answers before problems arise, not after they occur.

Eight questions your AI system provider needs to answer

Based on the ICO’s findings and current UK employment law, here are the conversations you need to have with your providers:

1. What’s your lawful basis for processing under UK GDPR?

This isn’t a technical question; it’s a fundamental one. The ICO found some providers potentially operating without valid legal grounds. Your provider should clearly state whether they’re relying on legitimate interests, consent, contract, or another basis. If they can’t articulate this, that’s a red flag.

2. How have you tested for bias across UK demographic groups?

“We’ve tested for bias” isn’t sufficient. You need specifics. What demographic groups did they test? What were the actual results across age, gender, race, and disability? How often do they conduct these audits, and who carries them out: an internal team or an independent auditor?

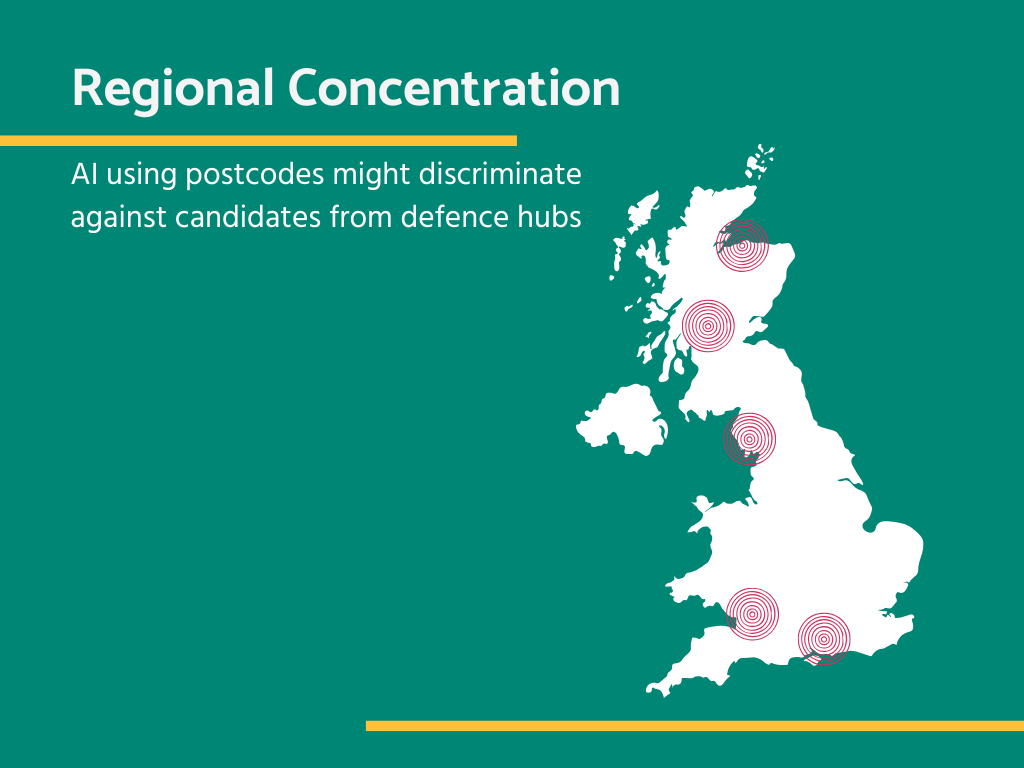

The ICO found many providers lacked adequate bias testing. Don’t assume your AI system is different. For defence contractors, there’s an additional bias risk: regional discrimination. AI systems trained on postcode data may inadvertently filter out candidates from defence industry hubs such as Barrow-in-Furness, Portsmouth, Plymouth, and the Scottish shipyards. If your system uses location as a screening factor, it may be systematically excluding exactly the candidates with relevant sector experience, and doing so in a way that may constitute indirect discrimination on the basis of place of origin.
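To make “actual results” concrete, here is a minimal sketch of the kind of figures you could ask a provider to produce: the shortlisting rate for each demographic group, and each group's rate as a fraction of the highest group's rate. The group labels and numbers below are invented purely for illustration, and the function names are my own. The 0.8 flag is a commonly quoted rule of thumb that originates in US guidance; it is not a UK legal test, so treat it as a prompt for further questions rather than a pass mark.

```python
from collections import Counter


def selection_rates(outcomes):
    """Return {group: share shortlisted} from (group, shortlisted) pairs."""
    totals, selected = Counter(), Counter()
    for group, shortlisted in outcomes:
        totals[group] += 1
        if shortlisted:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was shortlisted, so no ratio can be computed.
        return {group: None for group in rates}
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical data: 100 applicants per group, invented for illustration.
sample = (
    [("under_40", True)] * 30 + [("under_40", False)] * 70
    + [("40_and_over", True)] * 15 + [("40_and_over", False)] * 85
)

rates = selection_rates(sample)
ratios = impact_ratios(rates)

for group in rates:
    # Assumed threshold of 0.8: a rule of thumb, not a UK legal standard.
    flag = "review" if ratios[group] is not None and ratios[group] < 0.8 else "ok"
    print(f"{group}: shortlisted {rates[group]:.0%}, ratio {ratios[group]:.2f} ({flag})")
```

A provider that has genuinely tested for bias should be able to hand over a table like this for each protected characteristic, along with the sample sizes behind it. With small candidate pools the rates will be noisy, so ask how many applicants each figure is based on.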

3. Does your system recognise UK-specific qualifications and career patterns?

Most AI systems are trained on US data. You need to ask explicitly whether yours recognises HNCs, NVQs, and UK apprenticeships. Does it understand MOD contractor experience and security clearance processing times? How does it handle the project-based work patterns common in defence?

If the system doesn’t understand UK defence sector careers, it will filter out your best candidates before you ever see them.

4. What data are you collecting, and why do you need it?

The ICO found providers scraping LinkedIn and social media, collecting far more data than necessary. You need to know what specific data points they’re collecting and what the justification is for each one. Are they scraping social media or job networking sites? How long do they retain candidate data, and what happens to it after the hiring decision?

If they’re collecting more than CV and application data, push them to explain why.

5. Can you explain individual decisions to candidates?

Under UK GDPR, candidates have the right to understand automated decisions. Your provider should be able to explain why a candidate was rejected, what criteria were used, and how the algorithm reached its decision.

If they claim proprietary algorithms prevent explanation, that’s a problem. The law doesn’t exempt you because your service provider wants to protect trade secrets.

6. How do you handle reasonable adjustments for disabled candidates?

The Equality Act requires reasonable adjustments, but AI systems often fail to make them. Video interview tools that assess facial expressions can disadvantage some disabled candidates. Timed assessments can disadvantage neurodiverse candidates. Systems that analyse tone of voice or communication patterns create barriers for others.

Your service provider must demonstrate how they ensure disabled candidates aren’t disadvantaged. “We treat everyone the same” is indirect discrimination if the system creates barriers.

7. What’s your process when a candidate challenges a decision?

The ICO criticised the lack of clear challenge processes. You need to know whether candidates can request human review, what the timeline is for challenges, who conducts the review, and how they ensure the review is genuinely independent from the algorithm. If there’s no meaningful challenge process, you’re exposed when discrimination claims arise.

8. Who’s liable if a discrimination claim succeeds?

Read your contract carefully. Many AI service providers position themselves as “tools” that you control, shifting liability entirely to you. Some contracts explicitly state they’re not liable for discriminatory outcomes.

If your service provider won’t share liability, they should at least provide professional indemnity insurance covering discrimination claims, evidence of their compliance measures, and a commitment to cooperate in your defence if claims arise.

What “We’re compliant” actually means

Many AI service providers will tell you they’re compliant with UK GDPR and equality law. That’s not enough. The ICO made 296 specific recommendations to supposedly compliant providers.

Ask to see independent audit reports, not just their own assessments. You want bias testing results showing actual data, not just assurances. Make sure they’ve done UK-specific testing, not just US or global testing. And ask for evidence of changes they’ve made following the ICO investigation.

If they can’t provide documentation, they’re asking you to take a significant legal risk on trust.

The defence sector complication

You face additional challenges that mainstream recruitment doesn’t:

Security clearance eligibility. You legitimately need to consider nationality for cleared roles. But how does the AI apply this? Does it filter all non-UK nationals at the CV stage, potentially discriminating by race?

Small candidate pools. You might receive 30 applications for a critical role, not 300. Algorithmic errors could eliminate your only qualified candidate.

Long-term relationships. Defence is a small world. If AI wrongly rejects someone who then joins a competitor, you’ll encounter them again. Reputational damage lasts.

What I’m seeing

Since the ICO report, I’ve heard that multiple contractors have started reviewing their AI recruitment tools. Some have already switched back to human-led screening. These are not technophobes. They’re risk managers who recognise that £50,000 discrimination awards, combined with reputational damage in a tight talent market, aren’t worth the efficiency gains.

The alternative

This isn’t about rejecting technology entirely. AI can be useful for generating ideas and brainstorming approaches. But there’s a difference between using AI as a tool to enhance human judgment and outsourcing the entire decision to an algorithm.

At The Thrive Team, we assess candidates personally. That means when we recommend someone, we can explain exactly why. If a candidate challenges our assessment, we can discuss it. If an employer questions our recommendation, we can justify it. That’s accountability.

An AI system provider might tell you their algorithm is sophisticated. But can they explain why it rejected your best candidate? Can they demonstrate it understands UK defence careers and won’t discriminate? If you’re using AI recruitment tools in defence or engineering, these questions are your due diligence. Get answers in writing.

Connect with me, Martin Grady, on LinkedIn to discuss AI system assessment and compliant recruitment strategies.